Short answer: Yes, it does. Long answer:

When you create or update statistics without specifying a sample rate, SQL Server calculates the sample rate automatically.

If you want this statistic (or all statistics on the table) to use a fixed sample rate, say 5 percent, you can persist that rate when you update the statistics:

    UPDATE STATISTICS dbo.test2 WITH SAMPLE 5 PERCENT, PERSIST_SAMPLE_PERCENT = ON;

Or, to persist a full scan:

    UPDATE STATISTICS dbo.test2 WITH FULLSCAN, PERSIST_SAMPLE_PERCENT = ON;

(I would not advise persisting a full scan on big tables, since every later automatic statistics update would then read the whole table.)
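The option can also be limited to a single statistic instead of the whole table, and switched off again later. A minimal sketch using the IX_value index statistic from the test below; per the documentation, with PERSIST_SAMPLE_PERCENT = OFF, later updates that don't specify a sample rate go back to the default sampling:

```sql
-- Persist a 5 percent sample for a single statistic (IX_value) instead of
-- every statistic on dbo.test2
UPDATE STATISTICS dbo.test2 IX_value
    WITH SAMPLE 5 PERCENT, PERSIST_SAMPLE_PERCENT = ON;

-- Later, to stop persisting it: update once more with the option OFF, so
-- subsequent updates without an explicit sample rate use the default rate again
UPDATE STATISTICS dbo.test2 IX_value
    WITH SAMPLE 5 PERCENT, PERSIST_SAMPLE_PERCENT = OFF;
```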

From Microsoft:

Applies to: SQL Server 2016 (13.x) (starting with SQL Server 2016 (13.x) SP1 CU4) through SQL Server 2017 (starting with SQL Server 2017 (14.x) CU1).

(https://docs.microsoft.com/en-us/sql/t-sql/statements/update-statistics-transact-sql?view=sql-server-2017)
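The requirement above is about the engine build (the test below also works at older compatibility levels), so a quick check is to look at the build of the instance. A minimal sketch; compare the output against the builds listed above yourself:

```sql
-- Build information for the current instance. ProductUpdateLevel shows the
-- cumulative update (for example 'CU4') and may be NULL when not applicable.
SELECT SERVERPROPERTY('ProductVersion')     AS product_version,
       SERVERPROPERTY('ProductLevel')       AS product_level,
       SERVERPROPERTY('ProductUpdateLevel') AS product_update_level;
```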

In my example, I used compatibility level 110 (SQL Server 2012):

    select compatibility_level from sys.databases where name = 'test';
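To reproduce the test at the same level, the compatibility level can be set explicitly first. A minimal sketch, assuming the test database is called test as in the query above:

```sql
-- Put the test database on compatibility level 110 (SQL Server 2012)
ALTER DATABASE [test] SET COMPATIBILITY_LEVEL = 110;

-- Confirm the new level
SELECT compatibility_level FROM sys.databases WHERE name = 'test';
```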

    create table dbo.test2 (id int identity(1,1), value varchar(50));
    create index IX_value on dbo.test2(value);
    go

    set nocount on;
    insert into dbo.test2 VALUES ('BlaBLa');
    go 5000

Result:

    Beginning execution loop
    Batch execution completed 5000 times.

    insert into dbo.test2
    select value from dbo.test2;
    go 7

    Beginning execution loop
    Batch execution completed 7 times.

Result: 640,000 rows (5,000 rows doubled seven times).

    select a.value from dbo.test2 a
    inner join dbo.test2 ac on a.id = ac.id
    where a.value = 'BlaBLa' or a.value = '1';

    --Check the statistics
    SELECT ss.name, persisted_sample_percent,
        (rows_sampled * 100)/rows AS sample_percent
    FROM sys.stats ss
    INNER JOIN sys.stats_columns sc
        ON ss.stats_id = sc.stats_id AND ss.object_id = sc.object_id
    INNER JOIN sys.all_columns ac
        ON ac.column_id = sc.column_id AND ac.object_id = sc.object_id
    CROSS APPLY sys.dm_db_stats_properties(ss.object_id, ss.stats_id) shr
    WHERE ss.[object_id] = OBJECT_ID('[dbo].[test2]');

| name | persisted_sample_percent | sample_percent |
|---|---|---|
| IX_value | 0 | 60 |
| _WA_Sys_00000001_5CD6CB2B | 0 | 60 |

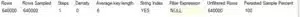

    DBCC SHOW_STATISTICS(test2,IX_value)


As expected, there is no persisted sample percent, and the sample rate is 60%.

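Next, the same test with a persisted full-scan rate, set while the table still holds 5,000 rows:

    truncate table dbo.test2;
    go

    set nocount on;
    insert into dbo.test2 VALUES ('BlaBLa');
    go 5000

    UPDATE STATISTICS dbo.test2 WITH FULLSCAN, PERSIST_SAMPLE_PERCENT = ON;
    go

    insert into dbo.test2
    select value from dbo.test2;
    go 7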

    select a.value from dbo.test2 a
    inner join dbo.test2 ac on a.id = ac.id
    where a.value = 'BlaBLa' or a.value = '1';

    --Check the statistics
    SELECT ss.name, persisted_sample_percent,
        (rows_sampled * 100)/rows AS sample_percent
    FROM sys.stats ss
    INNER JOIN sys.stats_columns sc
        ON ss.stats_id = sc.stats_id AND ss.object_id = sc.object_id
    INNER JOIN sys.all_columns ac
        ON ac.column_id = sc.column_id AND ac.object_id = sc.object_id
    CROSS APPLY sys.dm_db_stats_properties(ss.object_id, ss.stats_id) shr
    WHERE ss.[object_id] = OBJECT_ID('[dbo].[test2]');

| name | persisted_sample_percent | sample_percent |
|---|---|---|
| IX_value | 100 | 100 |
| _WA_Sys_00000001_5CD6CB2B | 100 | 100 |

    DBCC SHOW_STATISTICS(test2,IX_value)
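To look only at the header of the DBCC SHOW_STATISTICS output, which holds the row counts, the STAT_HEADER option can be used. On builds that support persisted sample rates the header should also contain a Persisted Sample Percent column; treat that column name as something to verify on your own build:

```sql
-- Header only: includes Rows and Rows Sampled (and, on builds that support
-- persisted sample rates, reportedly a Persisted Sample Percent column)
DBCC SHOW_STATISTICS ('dbo.test2', IX_value) WITH STAT_HEADER;
```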

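Next, the same run with a 70 percent sample. This statement replaces the FULLSCAN update, and the table is then refilled and queried as before. For these runs the check query also returns rows and rows_sampled:

    UPDATE STATISTICS dbo.test2 WITH SAMPLE 70 PERCENT, PERSIST_SAMPLE_PERCENT = ON;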

| name | rows | rows_sampled | persisted_sample_percent | sample_percent |
|---|---|---|---|---|
| IX_value | 640000 | 445043 | 70 | 69 |
| _WA_Sys_00000001_5CD6CB2B | 640000 | 445043 | 70 | 69 |
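The 69 (rather than 70) comes from the check query: (rows_sampled * 100)/rows uses integer division, so 445043 * 100 / 640000 = 69.54 is truncated to 69. A minimal sketch of the same check with decimal arithmetic; it also drops the joins to sys.stats_columns and sys.all_columns, which the output doesn't need (and which would repeat a row for multi-column statistics):

```sql
-- Multiplying by 100.0 forces decimal arithmetic instead of integer division;
-- NULLIF avoids a divide-by-zero error when the statistic reports 0 rows
SELECT ss.name,
       shr.rows,
       shr.rows_sampled,
       shr.persisted_sample_percent,
       CAST(shr.rows_sampled * 100.0 / NULLIF(shr.rows, 0) AS decimal(5, 2)) AS sample_percent
FROM sys.stats AS ss
CROSS APPLY sys.dm_db_stats_properties(ss.object_id, ss.stats_id) AS shr
WHERE ss.object_id = OBJECT_ID('dbo.test2');
```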

With an 80 percent sample (same refill and query afterwards):

    UPDATE STATISTICS dbo.test2 WITH SAMPLE 80 PERCENT, PERSIST_SAMPLE_PERCENT = ON;

| name | rows | rows_sampled | persisted_sample_percent | sample_percent |
|---|---|---|---|---|
| IX_value | 640000 | 512224 | 80 | 80 |
| _WA_Sys_00000001_5CD6CB2B | 640000 | 512224 | 80 | 80 |

And with a 1 percent sample (the result is the same with compatibility level 140):

    UPDATE STATISTICS dbo.test2 WITH SAMPLE 1 PERCENT, PERSIST_SAMPLE_PERCENT = ON;

| name | rows | rows_sampled | persisted_sample_percent | sample_percent |
|---|---|---|---|---|
| IX_value | 640000 | 358150 | 1 | 55 |
| _WA_Sys_00000001_5CD6CB2B | 640000 | 358150 | 1 | 55 |

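In short, it still works. I also tried compatibility level 100, which works as well. One caveat from the last run: the persisted value was 1 percent, but about 55% of the rows were actually sampled, so a very low persisted rate is not necessarily the rate that gets used.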
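Because a persisted rate keeps being applied to later automatic updates, it can be useful to see where one is set. A minimal sketch, assuming a build where sys.dm_db_stats_properties exposes persisted_sample_percent (0 means nothing is persisted, as in the first test above):

```sql
-- List every user-table statistic in the current database
-- that has a persisted sample percent
SELECT OBJECT_SCHEMA_NAME(s.object_id) AS schema_name,
       OBJECT_NAME(s.object_id)        AS table_name,
       s.name                          AS stats_name,
       sp.persisted_sample_percent,
       sp.last_updated
FROM sys.stats AS s
CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
WHERE OBJECTPROPERTY(s.object_id, 'IsUserTable') = 1
  AND sp.persisted_sample_percent > 0
ORDER BY schema_name, table_name, stats_name;
```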

Source used:

https://blogs.msdn.microsoft.com/sql_server_team/persisting-statistics-sampling-rate/
