ETL processes have a lot of common parts between them. One important part of it that’s frequently repeated is a data copy sub-process to copy table(s) data from one server/database to another. Sometimes full table copies, in other cases with specific data selection. For every table or selection, you create the process in one or several packages/control flows and/or data tasks. There is not much special to say about small tables, but large tables are another matter.

Using simple data tasks can do the trick. The consequence of this is the well-known META data problem. Every time the source and/or target tables changes you need to update you package, retest it again and deploy anew. The reason for this is the META driven architecture of integrated services.

In those cases (large tables and changing objects) you have to change the package for the command line BCP tool. This will drastically improve your process time and avoids the META issue because the copy process is executed outside the SSIS environment.

And what is the problem with this? In fact, none … But there are some things that need your attention when creating these packages, such as:

As you can see a lot of extra elements that can go wrong. If you do this for a lot of tables you just have more work to do.

Creating these packages isn’t that difficult when you have some experience with SSIS. After a while the package maintenance comes up. A lot of people complain about the maintenance of these copy packages in SSIS. Depending on what you need to copy (full table copy or selective copy) there are more things that need to be changed over and over again.

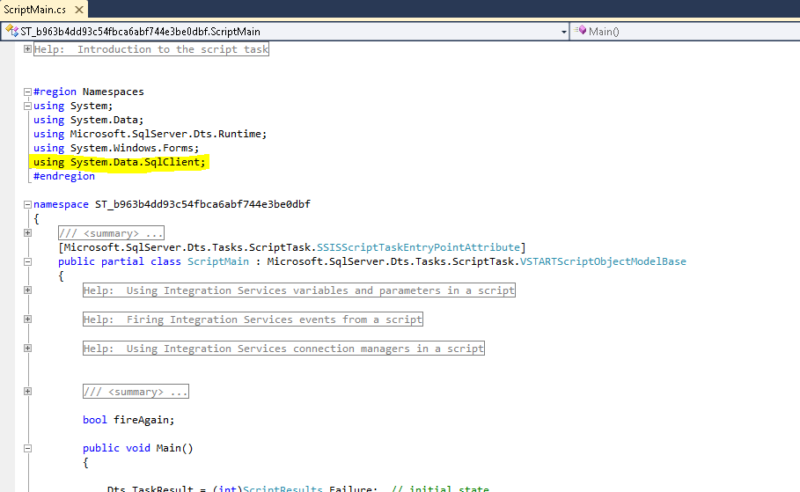

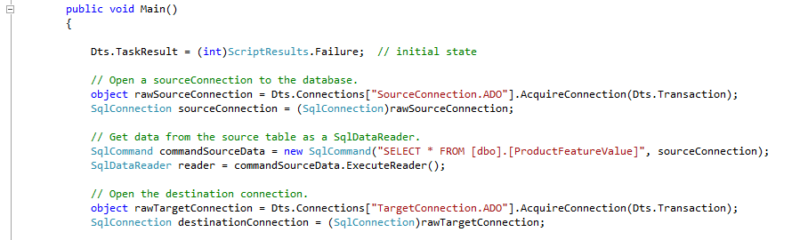

The solution for this is available within the script task. With the .NET framework (from version 2 until now) SqlBulkCopy class, you integrate the same functionality of the command line utility BCP.exe into your integrated service package.

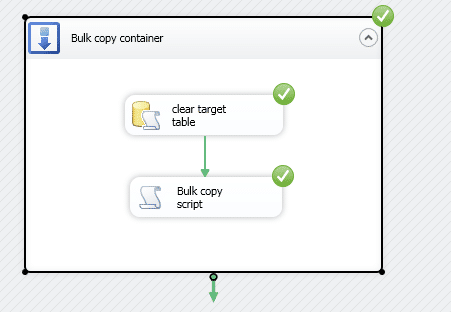

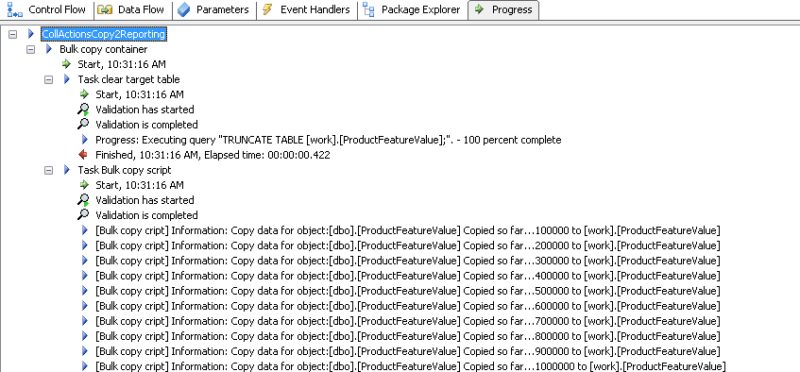

An example:

First, the execute SQL Task ‘clear target table’ clears the target table.

Secondly, the script task ‘Bulk copy script’ task, copies the data.

The notification handler

There are more possibilities and options using this class, that can improve our example. Parameterize the script to build hardcode-free code is possible of course. But this example explains the basics of this class usage.

You can find the SqlBulkCopy class documentation on msdn.

Advantages of this approach is the minimal maintenance effort on these packages, less packages and a big performance improvement. Disadvantages of using it, that depends on your knowledge level, you need some knowledge about scripting to write it. If you know a little of C#.NET, then this is a very small thing to do.

I’m not saying this is the only possibility, neither will I say that it solves all your problems by making only one package for all you copy tasks. But remember it as a possible solution for a lot of cases. Try it out for you case and see if it suits you.

Using this package solution architecture, you can make a dynamic package that copies a bunch of tables for you in no time. You can discover this in a next blog, ‘Dynamic Bulk copy SSIS package‘.

Another example I can give is a complete staging process, that processes a full or incremental load of data into the staging area of the ETL process. That will come up in another blog, ‘Dynamic Bulk copy Staging package.’

© 2022 Kohera

Crafted by

© 2022 Kohera

Crafted by