ETL processes share many common parts. One that is frequently repeated is a data copy sub-process that copies table data from one server or database to another: sometimes full tables, sometimes a specific selection of data. For every table or selection, you create the process in one or several packages, control flows and/or data flow tasks. There is not much to say about small tables, but large tables are another matter.

Simple data flow tasks can do the trick, but the consequence is the well-known metadata problem. Every time the source and/or target tables change, you need to update your package, retest it and deploy it again. The reason for this is the metadata-driven architecture of Integration Services.

In those cases (large tables and changing objects) you can replace the data flow in the package with a call to the command-line BCP tool. This typically improves processing time considerably and avoids the metadata issue, because the copy process is executed outside the SSIS environment.

And what is the problem with this? In fact, none … But there are some things that need your attention when creating these packages, such as:

As you can see, there are a lot of extra elements that can go wrong. If you do this for many tables, you simply have more work to do.

Creating these packages isn’t that difficult when you have some experience with SSIS, but after a while package maintenance becomes an issue. A lot of people complain about the maintenance of these copy packages in SSIS. Depending on what you need to copy (full table copy or selective copy), there are more things that need to be changed over and over again.

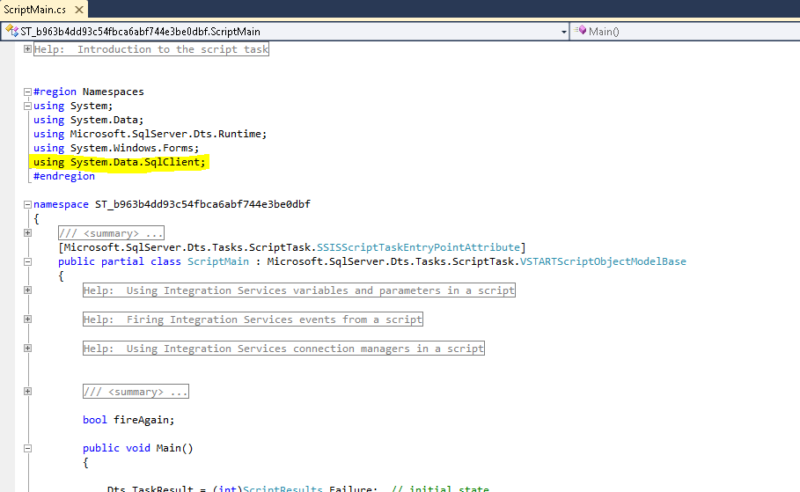

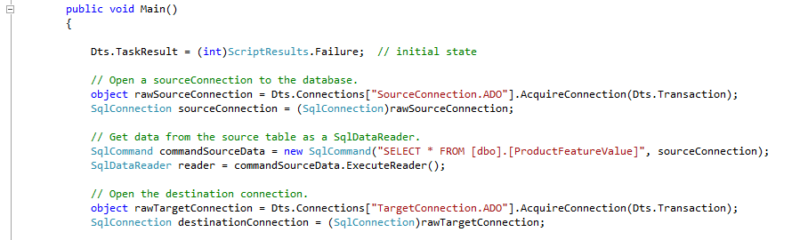

A solution for this is available within the Script Task. With the SqlBulkCopy class of the .NET Framework (available since version 2.0), you can bring the bulk-load functionality of the command-line utility BCP.exe into your Integration Services package.

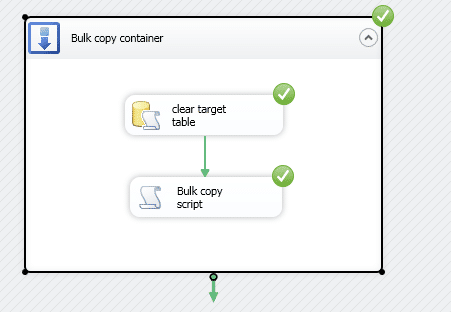

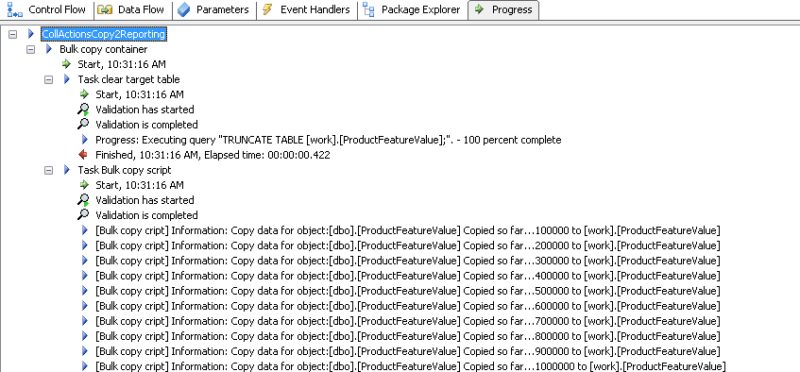

An example:

First, the Execute SQL Task ‘clear target table’ clears the target table.

Second, the Script Task ‘Bulk copy script’ copies the data.
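The heart of such a script can be sketched as follows. This is a minimal sketch under stated assumptions, not the code from the original package: the class name `BulkCopyExample`, both connection strings, the table names `dbo.SourceTable` and `dbo.TargetTable` and the batch size are hypothetical placeholders. Inside a real Script Task, the body of `CopyTable` would sit in the generated `Main()` method.

```csharp
using System.Data.SqlClient;

public static class BulkCopyExample
{
    // Hypothetical placeholders: replace with your own servers, databases and tables.
    private const string SourceConnectionString =
        "Data Source=SourceServer;Initial Catalog=SourceDb;Integrated Security=SSPI;";
    private const string TargetConnectionString =
        "Data Source=TargetServer;Initial Catalog=TargetDb;Integrated Security=SSPI;";

    public static void CopyTable()
    {
        using (var source = new SqlConnection(SourceConnectionString))
        using (var command = new SqlCommand("SELECT * FROM dbo.SourceTable", source))
        {
            source.Open();

            // Stream the rows from the source reader straight into the bulk copy,
            // so the full table never has to fit in memory.
            using (SqlDataReader reader = command.ExecuteReader())
            using (var bulkCopy = new SqlBulkCopy(TargetConnectionString,
                                                  SqlBulkCopyOptions.TableLock))
            {
                bulkCopy.DestinationTableName = "dbo.TargetTable";
                bulkCopy.BatchSize = 10000;    // rows per batch (assumed value, tune as needed)
                bulkCopy.BulkCopyTimeout = 0;  // 0 means no time limit
                bulkCopy.WriteToServer(reader);
            }
        }
    }
}
```

Because no column mappings are defined here, SqlBulkCopy maps source columns to target columns by ordinal position, so this sketch assumes both tables have the same column order.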

The notification handler
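A notification handler along these lines could report progress during the copy. Again a hedged sketch: the class name `BulkCopyNotification`, the method names and the console output are illustrative assumptions. In a Script Task you would more likely raise an SSIS information event than write to the console.

```csharp
using System;
using System.Data.SqlClient;

public static class BulkCopyNotification
{
    // Attach the handler before calling WriteToServer on the same SqlBulkCopy instance.
    public static void AttachProgressHandler(SqlBulkCopy bulkCopy, int notifyAfterRows)
    {
        bulkCopy.NotifyAfter = notifyAfterRows;     // raise SqlRowsCopied every N rows
        bulkCopy.SqlRowsCopied += OnSqlRowsCopied;
    }

    private static void OnSqlRowsCopied(object sender, SqlRowsCopiedEventArgs e)
    {
        // RowsCopied is the running total for the current bulk copy operation.
        Console.WriteLine("Rows copied so far: {0}", e.RowsCopied);
        // Setting e.Abort = true here would cancel the running bulk copy.
    }
}
```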

The class offers more possibilities and options that can improve our example. You can, of course, parameterize the script so that nothing is hard-coded. But this example explains the basics of using the class.
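One way to remove the hard-coded values is to pass them in as arguments. The sketch below is an assumption about how that could look, not the author's implementation: `ParameterizedBulkCopy` and its parameter names are hypothetical, and the name-based column mapping assumes the target table uses the same column names as the source query.

```csharp
using System.Data.SqlClient;

public static class ParameterizedBulkCopy
{
    public static void Copy(string sourceConnectionString,
                            string targetConnectionString,
                            string sourceQuery,
                            string destinationTable)
    {
        using (var source = new SqlConnection(sourceConnectionString))
        using (var command = new SqlCommand(sourceQuery, source))
        {
            source.Open();

            using (SqlDataReader reader = command.ExecuteReader())
            using (var bulkCopy = new SqlBulkCopy(targetConnectionString))
            {
                bulkCopy.DestinationTableName = destinationTable;

                // Map every source column to the target column with the same name,
                // so a different column order in the target does not matter.
                for (int i = 0; i < reader.FieldCount; i++)
                {
                    string columnName = reader.GetName(i);
                    bulkCopy.ColumnMappings.Add(columnName, columnName);
                }

                bulkCopy.WriteToServer(reader);
            }
        }
    }
}
```

In a Script Task, the arguments could come from package variables, for example `Dts.Variables["User::SourceQuery"].Value.ToString()`. The variable name is hypothetical, and the variable has to be listed in the task's ReadOnlyVariables.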

You can find the SqlBulkCopy class documentation on MSDN.

The advantages of this approach are minimal maintenance effort on these packages, fewer packages and a big performance improvement. The disadvantage depends on your knowledge level: you need some scripting knowledge to write it. If you know a little C#, this is a very small thing to do.

I’m not saying this is the only possibility, nor that it solves all your problems by making only one package for all your copy tasks. But remember it as a possible solution for a lot of cases. Try it out for your case and see if it suits you.

Using this package solution architecture, you can make a dynamic package that copies a bunch of tables for you in no time. You can read about this in an upcoming blog post, ‘Dynamic Bulk copy SSIS package’.

Another example is a complete staging process that handles a full or incremental load of data into the staging area of the ETL process. That will be covered in another blog post, ‘Dynamic Bulk copy Staging package’.

© 2023 Kohera
