With Analysis Services 2016 and Analysis Management Objects (AMO), we now have some powerful tooling to automate our cubes with, for example, PowerShell. In fact, it’s now (relatively) easy to retrieve all the measures from a cube in just a few seconds. That’s the example we’ll demonstrate in this blog. In the second part, we’ll try to do the same with an open Power BI file.

Two important prerequisites:

So, here’s the PowerShell script that will get the measures from a cube (change the first three variables to fit your environment):

```powershell
# Load the AMO assembly for tabular models (it must be installed on this machine)
[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices.Tabular") | Out-Null;

$tab = "YourSSASserver";
$dbId = "ID_or_DB";
$saveas = "C:\YourFolder\{0}.dax" -f $tab.Replace('\', '_');

# Connect to the SSAS instance and pick the database by its ID
$as = New-Object Microsoft.AnalysisServices.Tabular.Server;
$as.Connect($tab);
$db = $as.Databases[$dbId];
# in case you want to search by the name of the cube/db:
# $db = $as.Databases.GetByName("DB Name");

# Collect every measure, table by table
$out = "";
foreach($t in $db.Model.Tables) {
    foreach($M in $t.Measures) {
        $out += "// Measure defined in table [" + $t.Name + "] //" + "`n";
        $out += $M.Name + ":=" + $M.Expression + "`n";
    }
}
$as.Disconnect();

$out = $out.Replace("`t"," "); # I prefer spaces over tabs :-)
$out.TrimEnd() | Out-File $saveas;
```

As you can see, it’s not a long or complicated script. We connect to the SSAS instance and look up the database. Then we loop through the tables, and for each table we get the measures that are defined in it.

Just be warned about the separators (`;` vs `,`), as the settings on the SSAS server might differ from the ones on your local machine.

Also, check out the Analysis Services Cmdlets.

We can now develop a similar script to get the measures from an open Power BI file (`*.pbix`). When you’re working with a Power BI file, you’re actually running a local instance of SSAS (hint: look for the process named `msmdsrv.exe`), and you can even connect to it with SQL Server Management Studio (SSMS). The port it uses will most likely change each time you restart Power BI.

A colleague of mine pointed out that we can find the port that Power BI is actively using: it’s stored in a file named `msmdsrv.port.txt`. The file lives under your local app data folder, where you’ll find a folder for every open workspace that Power BI created. More specifically, it’s in this path:

`<LocalAppData>\Microsoft\Power BI Desktop\AnalysisServicesWorkspaces\<WorkSpace>\Data`

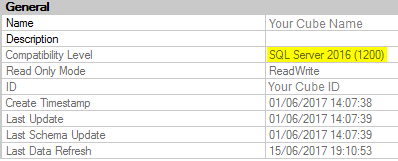
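To avoid browsing to that folder by hand, the lookup can be scripted. The following is a minimal sketch, not part of the original script: `Get-PowerBIPort` is a hypothetical helper name, and it assumes every workspace folder holds a `Data\msmdsrv.port.txt` file containing just the port number. Because the file's encoding is not taken for granted here, the sketch simply keeps the ASCII digits it finds in the raw bytes.

```powershell
# Hypothetical helper: returns the port of every open Power BI Desktop workspace.
function Get-PowerBIPort {
    param(
        # Root folder that holds one subfolder per open workspace
        [string]$WorkspaceRoot = (Join-Path $env:LOCALAPPDATA 'Microsoft\Power BI Desktop\AnalysisServicesWorkspaces')
    )

    Get-ChildItem -Path $WorkspaceRoot -Directory | ForEach-Object {
        $portFile = Join-Path (Join-Path $_.FullName 'Data') 'msmdsrv.port.txt'
        if (Test-Path $portFile) {
            # Keep only the bytes that are ASCII digits (48..57), so the result
            # does not depend on the encoding the file was written in.
            $digits = [System.IO.File]::ReadAllBytes($portFile) |
                Where-Object { $_ -ge 48 -and $_ -le 57 }
            $portText = -join ($digits | ForEach-Object { [char]$_ })

            [pscustomobject]@{
                Workspace = $_.Name
                Port      = [int]$portText
            }
        }
    }
}

# Usage: list the ports, then connect to "localhost:<port>"
# Get-PowerBIPort
```

With several Power BI files open you get one row per workspace, so you may still need to try each port to find the model you’re after.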

After you connect with SSMS, you’ll be able to browse it like you’re used to, but the name of the database does not reveal the name of the Power BI file.

Now that we have an approach for hacking our way into the Power BI model, we can extend the existing PowerShell script and make it work for Power BI as well. This is the link to the full script.
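To give an idea of what that extension involves, here is a minimal sketch. It is not the full script itself. `ConvertTo-DaxText` is a hypothetical helper that produces the same text layout as the script above, and the commented usage assumes that you already read the port from `msmdsrv.port.txt` and that the local instance hosts a single database.

```powershell
# Hypothetical helper: turns a collection of tables into the same text layout
# as the SSAS script. It works on any objects that expose Name and Measures,
# where each measure exposes Name and Expression.
function ConvertTo-DaxText {
    param($Tables)

    $lines = foreach ($t in $Tables) {
        foreach ($m in $t.Measures) {
            "// Measure defined in table [$($t.Name)] //"
            "$($m.Name):=$($m.Expression)"
        }
    }

    # One line per entry; tabs become spaces, as in the original script
    return ($lines -join "`n").Replace("`t", " ")
}

# Usage against an open Power BI file (requires the AMO assembly):
# [System.Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices.Tabular") | Out-Null
# $port = 12345              # assumption: the value found in msmdsrv.port.txt
# $as = New-Object Microsoft.AnalysisServices.Tabular.Server
# $as.Connect("localhost:$port")
# $db = $as.Databases[0]     # assumption: the local instance hosts one database
# ConvertTo-DaxText -Tables $db.Model.Tables | Out-File "C:\YourFolder\PowerBI.dax"
# $as.Disconnect()
```

Keeping the formatting in its own function means the same code can serve both the SSAS server and the Power BI instance. Only the connection string changes.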

Enjoy it! :-)

Side note: not all the features that are present in the SSAS API will work with Power BI files. For example, processing the cube does not seem to work. If you find a workaround for that, I’d be happy to hear it.

© 2023 Kohera
